Implementation and Testing

Iteration planning

Prioritizing features

- All applications have obvious dependencies between features in terms of what needs what before it can be built

- If there is no game board you can't think about moving pieces on the board yet

- If there is no database persistence layer it would be a waste of time to work on saving the game state.

- The feature dependency graph has an edge from a feature to a feature it depends on: you can't get the feature running until all its dependencies are working.

- From the feature dependency graph there are multiple successful paths to building your final app over time: the onion of how it will appear layer by layer over time

- Reify the onion into a series of deadlines, regular (two-week for us) iterations with concrete goals of features/etc implemented in each successive layer/iteration

- Your job as a software developer is to find the most natural route through the dependency maze to your final app.
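To make the onion idea concrete, here is a minimal sketch that peels a feature dependency graph into layers, assuming Python 3.9+ and its standard `graphlib` module. The feature names and the helper `onion_layers` are made up for illustration; this computes only one possible layering (everything as early as its dependencies allow), not the only valid route.

```python
from graphlib import TopologicalSorter

# Hypothetical features for a board-game app. Each feature maps to the
# set of features it depends on (the edges of the dependency graph).
DEPENDS_ON = {
    "game board": set(),
    "persistence layer": set(),
    "move pieces": {"game board"},
    "save game state": {"game board", "persistence layer"},
    "resume saved game": {"save game state", "move pieces"},
}


def onion_layers(depends_on):
    """Group features into layers; each layer needs only earlier layers."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    layers = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything buildable right now
        layers.append(ready)
        sorter.done(*ready)
    return layers


layers = onion_layers(DEPENDS_ON)
for number, features in enumerate(layers, start=1):
    print(f"layer {number}: {', '.join(features)}")
```

A real iteration plan would usually spread a crowded layer over several iterations, since team capacity, not just dependencies, limits how much fits in two weeks.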

As an in-class example we will sketch an onion for the Lights Out app; it's too simple an example, but on the plus side we all know it.

How to prioritize and divide responsibilities

- Define a subset of features to start with, the key features, which when implemented will give bare-bones functionality

- Since there are multiple people on a team, you need a "parallel programming plan" so you can proceed simultaneously with minimal blocking/conflicts.

- Make sure to "program to interfaces, not implementations" (a basic OO design principle) between team members: have a known meet-point

-- RESTful server APIs are one example, also distinct models and views in UI programming, distinct page views, etc.
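As a rough illustration of a "meet-point" between two team members, the sketch below uses a Python `Protocol` as the agreed interface (Python 3.9+ assumed). The names `GameStore`, `InMemoryGameStore`, and `autosave` are hypothetical: one person codes against `GameStore` while another builds the real persistence behind it.

```python
from typing import Protocol


class GameStore(Protocol):
    """The agreed meet-point: what the UI side may call, nothing more."""

    def save(self, game_id: str, state: list[int]) -> None: ...

    def load(self, game_id: str) -> list[int]: ...


class InMemoryGameStore:
    """Stand-in implementation so work on the UI side is not blocked."""

    def __init__(self) -> None:
        self._games: dict[str, list[int]] = {}

    def save(self, game_id: str, state: list[int]) -> None:
        self._games[game_id] = list(state)  # copy to avoid aliasing

    def load(self, game_id: str) -> list[int]:
        return list(self._games[game_id])


def autosave(store: GameStore, game_id: str, state: list[int]) -> list[int]:
    """UI-side code written against the interface only."""
    store.save(game_id, state)
    return store.load(game_id)


restored = autosave(InMemoryGameStore(), "game-1", [1, 0, 1])
```

When the database-backed store is ready it can replace `InMemoryGameStore` without any change to `autosave`, which is the point of agreeing on the interface first.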

Iterations

An iteration of a project is a planned global step in the development of a piece of software.

- It's one layer in the onion above

- An iteration should not be too big: add some features, modify the design to do one aspect differently, etc.

- Iterations give you many little deadlines to successfully hit -- every two weeks in this class.

Planning your iterations

How to plan iterations in practice?

- An iteration plan maps feature implementation and other tasks on to which iteration they should be implemented in.

- Have (as in write out) a detailed plan for the next iteration and a fuzzier one for more distant iterations

- Continually revise your iteration plan as you go

- Maybe some things in the previous iteration proved too hard -- bump them to the current iteration or divide them into smaller problems over several future iterations.

- Make clear what the new set of features you want to add in the next iteration is - take your fuzzy ideas from the previous iteration plan and refine them.

- Use a tool to help write and keep the plan updated and for everyone to know the plan.

- There are many tools to help you do this now, Trello is a big one; also see ZenHub, kanban, waffle.io, etc

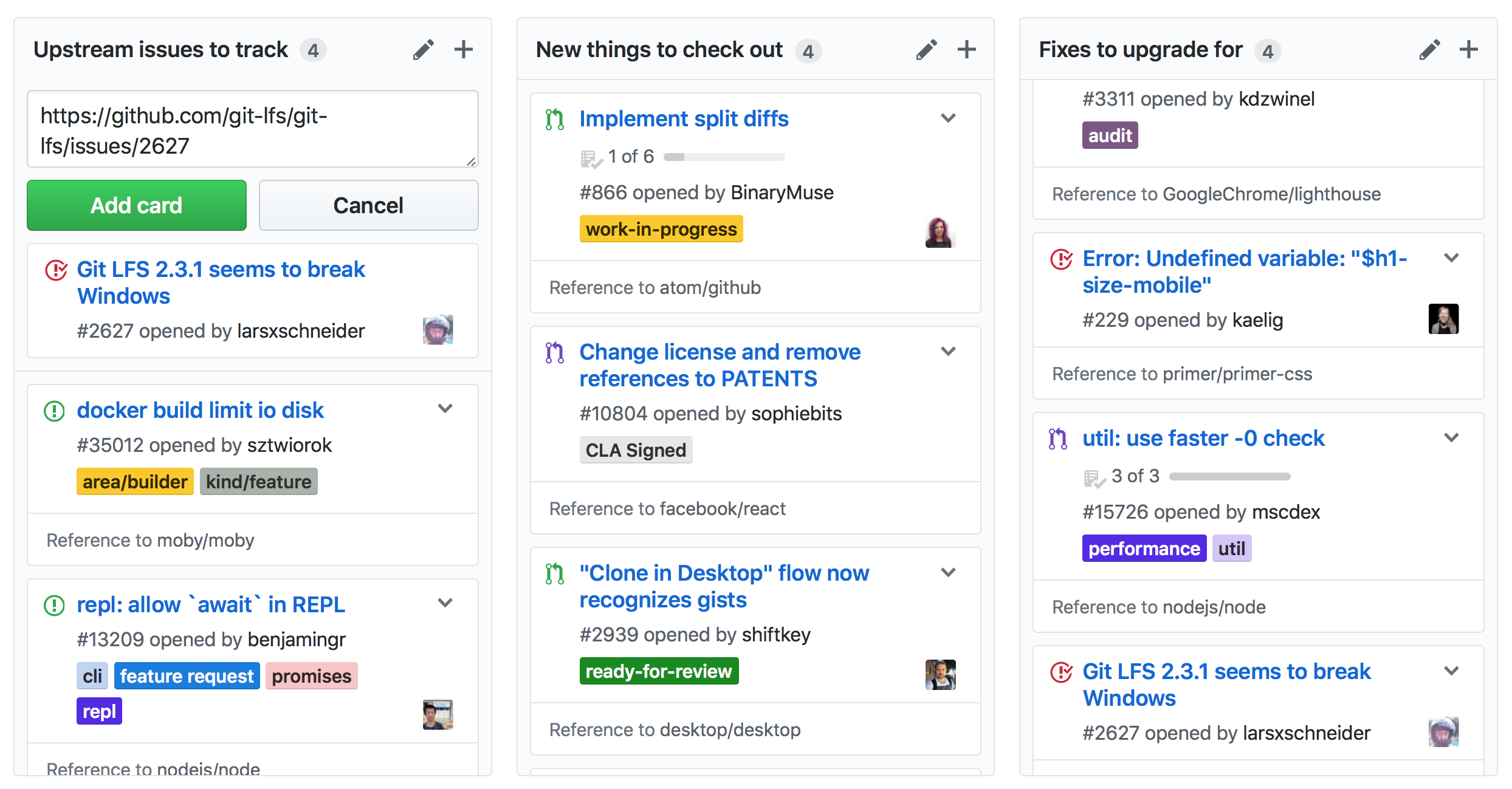

- For your projects you will be required to use GitHub's version, the Project boards, starting in iteration 3. A picture from their tutorial:

- Cards can be linked to GitHub issues in the issue tracker system - see key issues in graphical format, move between iterations by drag-and-drop.

- We will look at the project board of a past OOSE project

Releases and release terminology

This important topic seems to not get covered in any class? Here is a quick review.

A release is a stable iteration released to some users.

- version X.Y.Z (e.g. 10.8.3) means major version X, minor version Y, patch version Z.

- patches should only fix bugs, backward compatibility should not be broken (for users upgrading from older version). If a version number is X.Y only it is shorthand for X.Y.0.

- minor versions add new functionality which should also be backwards-compatible.

- major versions may make changes which are not backwards-compatible -- users need to change their code or adjust their workflow.

- a pre-release is not a stable version; suffixes are added, e.g. 10.8.3-pre or 8.3.23-alpha or 2.3.2-beta.

- alpha release (-alpha) - some features of eventual version haven't been implemented yet and there are quite a few bugs. More for in-house testing.

- beta release (-beta): the feature set is frozen and all features more or less work, but there are still bugs. Outside advanced users can use it.

- pre-release (-pre): a generic term for alpha or beta

- release candidate (-rc): better than beta; on deck for shipment, infinitesimal bugs only

- Newer standard: bleeding edge/stable/old marked to note how buggy a release might be. Ubuntu's version is regular and LTS (long-term support: will receive bug patches for longer).

- Use git tags to tag certain commits with version numbers -- that code is the official release of that version.

- Version number less than 1: an application that has yet to get beyond alpha/beta/pre in any release.

- Rolling releases: release a new version every few weeks, not necessarily based on a features cluster (software products using this include Chrome, Rust, Firefox).

- The above terms are not always used consistently; semver.org is an attempt to standardize terminology, see that link for guidance on good version numbering.
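The numbering rules above can be sketched in a few lines of Python. This is an illustration of the X.Y.Z convention as described in these notes, not a full semver.org implementation; the helper names `parse`, `change_kind`, and `is_stable` are assumptions, and treating versions below 1 as not yet stable is one reading of the bullet above.

```python
import re
from typing import NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int
    pre: str  # "" for a stable release, else e.g. "alpha", "beta", "rc"


_PATTERN = re.compile(r"(\d+)\.(\d+)(?:\.(\d+))?(?:-([0-9A-Za-z.-]+))?")


def parse(text: str) -> Version:
    """Parse 'X.Y.Z' or 'X.Y', with an optional '-suffix'."""
    match = _PATTERN.fullmatch(text)
    if match is None:
        raise ValueError(f"not a version number: {text!r}")
    major, minor, patch, pre = match.groups()
    # X.Y is shorthand for X.Y.0
    return Version(int(major), int(minor), int(patch or 0), pre or "")


def change_kind(old: str, new: str) -> str:
    """Say which part of the version number changed from old to new."""
    a, b = parse(old), parse(new)
    if b.major != a.major:
        return "major"  # may break backward compatibility
    if b.minor != a.minor:
        return "minor"  # new, backward-compatible functionality
    if b.patch != a.patch:
        return "patch"  # bug fixes only
    return "none"


def is_stable(text: str) -> bool:
    """No pre-release suffix, and already past the 0.x stage."""
    version = parse(text)
    return version.pre == "" and version.major >= 1


print(change_kind("10.8.3", "10.9"))  # minor
print(is_stable("2.3.2-beta"))        # False
```

A user upgrading can read `change_kind` as a risk signal: "patch" and "minor" should be safe, while "major" means checking for breaking changes first.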

Implementation Principles

Here are some good principles for implementation, many of which are from the Agile school of thought.

Practice Collective Code Ownership

- Everyone can edit all code (for us that is via git)

- Humans are social animals; sharing code will better share ideas/concepts/solutions

- Allows refactoring to not be bounded--tweak others' code if you see a better way for it to interact with your code

- Aids in production of readable, elegant code (who wants to be hated by everyone else)

- But, it needs unit tests to work well -- if you tweak others' code, run their tests to make sure you didn't hose it.

- If you are not regularly pushing code you are "not playing well with others".

Communicate!

There are many levels on which you need to be communicating with your team, beyond collective code.

- Program to interfaces as mentioned above -- modularizes code AND responsibilities

- Use an issue tracker and an iteration planning tool to keep everyone on the same page (we are requiring use of these!)

- Make sure you have the right number of in-person meetings scheduled

- Pair program (discussed below) and/or have regular group coding sessions to increase joint understanding

- Use Slack or other IM client for low-overhead communication with your team

Continuous Integration (CI)

Build the whole system on a regular basis; don't work on a subcomponent in isolation for long.

- For your projects, a common "integration" is the front-end with the back-end, and between big pieces of the back-end.

- Forces a methodology of many small changes (and small bugs to fix) as opposed to one big change that breaks the system and gives mega-bugs.

- You need to figure out how to break a big change into a series of small changes to get CI to work for you.

- A key feature of CI is running your tests every time you integrate -- this will catch integration bugs quickly.

- Tool support for CI has recently increased: tools can run your tests automatically at each push to master.

-- tools for this are discussed below.

Have a coding standard

- This is a standard intro programming topic you should know already; just make sure you are doing it.

- See the bottom of Assignment 1 for the standards for this course.

Program in pairs

Pair programming is two people programming on one terminal.

- A pair consists of a driver and a partner

- The driver has the keyboard - ALL coding is done in pairs

- Partner: corrects flaws in the driver's work

-- syntax

-- conceptual

-- asks questions of the driver about the code

- Driver:

-- has final say (they have the keyboard)

-- can focus on code details since the partner is on the concepts

Advantages:

- One brain has attention to "concepts", the other to "details" -- specializing in a way that makes a "superbrain" better than two individual brains trying to hold both at once.

- You get the best qualities of two people - some are better at concepts/critiquing and some better at details/typing.

- Rapidly train new people (intense exposure), improves mutual understanding/communication

- Leverage that "humans are social animals" aspect again

Conclusion: works well with some personalities on some projects some or all of the time. Give it a test-drive if you have not tried it before.

Version Control

(This is a key topic of implementation but we already had a separate lecture on git! See the course git page)

Testing

Testing is a major component of commercial software development.

- All code is obviously tested before shipping, the question is how thoroughly/frequently/automatically it is tested.

- A test suite is a set of tests which can be automatically run and the code either passes or fails.

- If you don't take a methodical approach to automated testing it is very difficult to develop large pieces of software

-- you need about 5x an OOSE project in size before you are char-broiled without good tests.

- Writing code to be automatically testable also requires you to make better interfaces and naturally leads to much better code

-- the rewards are beyond the tests themselves.

- Testing also makes refactoring (next lecture) much less daunting - you can super quickly verify your change didn't break your app.

- Conclusion: JUST DO IT! To help you along the path, it's 25% of iteration 4's grade.

Testing Hierarchies

Here are some common rough classes of tests

- Unit tests (low-level operations of one component)

- UI tests (tests of the user interface)

- Integration tests (tests of how components interact)

- Acceptance tests (at the level of features / use-cases)

Unit Testing

Write small tests for each nontrivial operation

- Test suites (sets of tests) should be automatically executable.

- Each test always returns either true (success) or false (fail).

- Initially a "unit" test meant you test each class in complete isolation (mock any objects it interacts with), but today this is rarely practiced

- Methodology: re-run the complete unit test suite after any significant change, and immediately debug to get test success back to 100%

- Code bases with thorough test coverage are much, much (much, much, much, ...) more reliable and debuggable

-- stamp out bugs immediately on the code you just wrote

- You are required to implement unit tests by iteration 4.

- In lecture we will review our own Lights Out unit test suite; the To Do app also has a super simple unit test suite if you want an example to look at on your own.
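For a feel of what a small automatically executable suite looks like, here is a sketch using Python's built-in `unittest` module. The `press` function is a hypothetical stand-in for a Lights Out move on a square board; it is not the course's actual implementation.

```python
import unittest


def press(board, row, col):
    """Return a new board with (row, col) and its neighbors toggled."""
    size = len(board)
    new_board = [list(r) for r in board]
    for r, c in [(row, col), (row - 1, col), (row + 1, col),
                 (row, col - 1), (row, col + 1)]:
        if 0 <= r < size and 0 <= c < size:  # ignore off-board neighbors
            new_board[r][c] = not new_board[r][c]
    return new_board


class PressTest(unittest.TestCase):
    def setUp(self):
        # Every test starts from the same all-dark 3x3 board.
        self.dark = [[False] * 3 for _ in range(3)]

    def test_center_press_lights_a_plus_shape(self):
        lit = press(self.dark, 1, 1)
        self.assertEqual(lit, [[False, True, False],
                               [True, True, True],
                               [False, True, False]])

    def test_corner_press_stays_on_the_board(self):
        lit = press(self.dark, 0, 0)
        self.assertEqual(sum(map(sum, lit)), 3)

    def test_pressing_twice_restores_the_board(self):
        self.assertEqual(press(press(self.dark, 2, 1), 2, 1), self.dark)


# Run the suite programmatically; each test either passes or fails.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(PressTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

In day-to-day use the tests would live in their own file and be run with `python -m unittest` after every significant change.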

Unit testing in Java, Python, Swift, etc.

- Every good development environment today comes with a good way to unit test

- If you are using Java, use JUnit

- Same story for other languages: e.g. PyUnit for Python; Rails and Swift have nice built-in testing frameworks; etc.

Test Coverage

- There is no need for tests that completely overlap in terms of bugs they would catch: quality over quantity.

- If there is some special case the code should work on, document this by writing a test for it.

- If you think an operation could fail, write a test to make sure you are catching it (e.g. is reading past the end of file caught?)

- Add tests before refactoring to make sure you can verify the success of the refactoring afterwards

- Cover bugs with tests (i.e. add a test that would have failed given the bug you just found; prevents recurring bugs)
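The guidelines above can be sketched as one small suite: a test for a special case, a test that a failure is caught, and a test covering a bug. The function `read_exactly` and the off-by-one bug mentioned in the comments are hypothetical, chosen to match the end-of-file example.

```python
import io
import unittest


def read_exactly(stream, count):
    """Read exactly `count` bytes from the stream or raise EOFError."""
    data = stream.read(count)
    if len(data) < count:
        raise EOFError(f"wanted {count} bytes, got {len(data)}")
    return data


class ReadExactlyTest(unittest.TestCase):
    def test_zero_bytes_is_a_documented_special_case(self):
        # Special case the code should handle: asking for nothing.
        self.assertEqual(read_exactly(io.BytesIO(b""), 0), b"")

    def test_reading_past_end_of_file_is_caught(self):
        # An operation that could fail: make sure the failure is reported.
        with self.assertRaises(EOFError):
            read_exactly(io.BytesIO(b"abc"), 4)

    def test_reading_the_whole_stream_is_allowed(self):
        # Covers a hypothetical past bug: a `<=` comparison in the length
        # check would have rejected a read that ends exactly at EOF.
        self.assertEqual(read_exactly(io.BytesIO(b"abc"), 3), b"abc")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(ReadExactlyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Note that the three tests do not overlap: each would catch a different mistake, which is the "quality over quantity" point.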

Test Coverage Tools

- Code coverage tools compute which lines of code are run by your test suite

- If a lot of lines are never run by any test you have bad test coverage

- Excellent coverage of code by line is an important dimension of overall test coverage

- Even with excellent line coverage you can have bad test coverage: it's based only on which lines of code run, not on the values of variables

- For Java, a common coverage tool is JaCoCo which has an Eclipse plugin, EclEmma; IntelliJ has its own code coverage tool built-in.

- In lecture we will run the IntelliJ code coverage tool on the Lights Out app unit test suite. (Note the IntelliJ coverage tool doesn't seem to be compatible with Maven)
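A tiny illustration of why line coverage alone can mislead: in the sketch below (the function `average` is a made-up example) a single check executes every line, so a line-coverage tool would report 100%, yet a different input value still crashes the same lines.

```python
def average(values):
    """Mean of a list of numbers (deliberately missing an empty-list check)."""
    total = sum(values)
    return total / len(values)


# This single check executes every line of average(): 100% line coverage.
assert average([2, 4, 6]) == 4

# Same lines, different values: the empty list still crashes.
try:
    average([])
    empty_list_outcome = "returned normally"
except ZeroDivisionError:
    empty_list_outcome = "ZeroDivisionError"

print(empty_list_outcome)
```

The fix on the testing side is to add a test for the empty list, which then forces a decision about what `average([])` should do.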

Acceptance testing

- Acceptance tests are roughly tests corresponding to use-cases: one per use-case.

- They should test the customer-facing side of the app: is it "acceptable" to customers?

Behavior-Driven Development

BDD is a relatively new approach to acceptance testing

- BDD-style acceptance tests start off in a format similar to a use-case scenario (a story): a linear sequence of steps in English.

- A precise mapping of that English on to code is defined.

- So, it's "just acceptance tests" but you don't have to stare at a pile of code to decipher the test.

- BDD is slowly gaining acceptance and may be mainstream eventually
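To show what "a precise mapping of that English on to code" can mean, here is a hand-rolled sketch in plain Python. Real BDD tools do much more; the `step` decorator, the `run_scenario` runner, and the one-row Lights Out variant are all assumptions made for illustration.

```python
import re

STEPS = []  # (compiled pattern, function) pairs


def step(pattern):
    """Register a function as the code behind one English step."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"Given a row of (\d+) lights that are all off")
def given_dark_row(ctx, count):
    ctx["lights"] = [False] * int(count)


@step(r"When the player presses light (\d+)")
def when_player_presses(ctx, number):
    i = int(number) - 1  # the story counts lights from 1
    for j in (i - 1, i, i + 1):
        if 0 <= j < len(ctx["lights"]):
            ctx["lights"][j] = not ctx["lights"][j]


@step(r"Then the number of lights on is (\d+)")
def then_lights_on(ctx, expected):
    assert sum(ctx["lights"]) == int(expected), ctx["lights"]


def run_scenario(text):
    """Run each non-blank line of the story against the registered steps."""
    ctx = {}
    for line in filter(None, map(str.strip, text.splitlines())):
        for pattern, func in STEPS:
            match = pattern.fullmatch(line)
            if match:
                func(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step matches: {line!r}")
    return ctx


SCENARIO = """
    Given a row of 5 lights that are all off
    When the player presses light 1
    Then the number of lights on is 2
    When the player presses light 2
    Then the number of lights on is 1
"""
final = run_scenario(SCENARIO)
```

The scenario text is what a customer or teammate reads; the decorated functions are the only place where the test logic lives.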

Integration Testing

- Integration tests are similar to acceptance tests - they also test the whole system

- But, integration tests check low-level aspects of the whole system and are not customer-facing

- Example: the Lights Out Postman script is integration testing the RESTful protocol

- If we were testing the front-end by automatically clicking on buttons that would be an acceptance test: "is the whole app performing acceptably?"

CI Services for integration (and unit/acceptance) testing

- One challenge of CI is individual developers have different build setups on their laptops

- A relatively recent solution is to use a CI service to run your build on a blank box and then run all your tests.

- The CI service defines the "gold standard" of success or fail of tests.

- The CI service requires a fully automated build and test process as a prerequisite

-- you should have this anyway; putting a CI service in the loop forces this

- We will use Travis-CI, a CI service company integrated with GitHub. For commercial repos use travis-ci.com, and use travis-ci.org for public repos

- Why GitHub and Travis together? For every push to master, Travis gets notified, builds the project, and runs your test suite!

- Jenkins is a service similar to Travis which you must run yourself on your own computer.

- You are required to use Travis for your projects unless it is completely infeasible; see OOSE Tools page for more information

- CI servers can also deploy for you, e.g. to Heroku.

- It's harder to test front-ends on CI servers and we don't require that for your projects.

We will show Travis-CI in action running the tests of Leandro's implementation of the Lights Out app.

Testing UIs

- It's hard to automate the input aspects of forms, scrollbars, etc.

- But things keep improving in terms of tools, and eventually it should be commonplace.

- Android -- see Android UI testing best practices for more details.

- JavaScript Web front-ends -- see e.g. TestCafe for programmatic testing of JavaScript web front-ends.

- You are encouraged, but not required, to perform front-end tests on your projects.

- Unit tests are for one component only; how do you deal with missing components you may be interacting with? Mock them up; see e.g. Mockito for a framework to help with Java mocking.
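Mocking looks much the same in every language. As a sketch, here it is with Python's built-in `unittest.mock` rather than a Java framework; `GameService` and its `store` collaborator are hypothetical names.

```python
import unittest
from unittest.mock import Mock


class GameService:
    """Component under test; depends on a store it does not own."""

    def __init__(self, store):
        self.store = store

    def resume(self, game_id):
        state = self.store.load(game_id)
        if state is None:
            raise KeyError(game_id)
        return {"id": game_id, "lights_on": sum(state)}


class GameServiceTest(unittest.TestCase):
    def test_resume_summarizes_the_saved_state(self):
        store = Mock()  # stands in for the missing component
        store.load.return_value = [1, 0, 1]
        summary = GameService(store).resume("game-1")
        self.assertEqual(summary, {"id": "game-1", "lights_on": 2})
        store.load.assert_called_once_with("game-1")

    def test_resume_of_unknown_game_raises(self):
        store = Mock()
        store.load.return_value = None
        with self.assertRaises(KeyError):
            GameService(store).resume("missing")


suite = unittest.defaultTestLoader.loadTestsFromTestCase(GameServiceTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

The mock lets `GameService` be tested before the real store exists, and also checks that the component calls its collaborator the way the two authors agreed.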

Testing apps with databases and distribution

You need to work harder to get some features automatically tested.

- RESTful servers: test at the level of the RESTful API; it's more challenging to test potential conflicts with multiple users but such testing is critical in industry-strength apps.

- Persistence layers: manually set up a fixed initial database configuration before running the tests.
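One common way to get a fixed initial database configuration is to rebuild it before every test. The sketch below assumes an in-memory SQLite database from Python's standard library; the `games` table, its rows, and `count_finished_games` are made up for illustration.

```python
import sqlite3
import unittest


def count_finished_games(conn, player):
    """Number of finished games recorded for the given player."""
    row = conn.execute(
        "SELECT COUNT(*) FROM games WHERE player = ? AND finished = 1",
        (player,),
    ).fetchone()
    return row[0]


class FinishedGamesTest(unittest.TestCase):
    def setUp(self):
        # Fresh in-memory database with the same known rows for every test.
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE games "
            "(id INTEGER PRIMARY KEY, player TEXT, finished INTEGER)"
        )
        self.conn.executemany(
            "INSERT INTO games (player, finished) VALUES (?, ?)",
            [("ada", 1), ("ada", 0), ("ada", 1), ("bob", 0)],
        )

    def tearDown(self):
        self.conn.close()

    def test_counts_only_finished_games(self):
        self.assertEqual(count_finished_games(self.conn, "ada"), 2)

    def test_player_without_finished_games(self):
        self.assertEqual(count_finished_games(self.conn, "bob"), 0)

    def test_changes_do_not_leak_between_tests(self):
        self.conn.execute("DELETE FROM games")
        self.assertEqual(count_finished_games(self.conn, "ada"), 0)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(FinishedGamesTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Because `setUp` recreates the data each time, the tests pass in any order and a test that deletes rows cannot break its neighbors.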

For your projects we primarily expect unit and integration tests on your servers, but it varies by project as some of you have little or no RESTful server component; work with your advisor on what testing is needed.

Iterations 3-6

We will briefly review Iteration 3 for the specific requirements.